A team of researchers from the University of California, San Diego, the Universidad Nacional de La Plata and the Kavli Institute for Brain and Mind has demonstrated a vocal synthesizer for birdsong that works by mapping brain activity, recorded from electrode arrays implanted in the birds’ brains, onto song. The team was able to reproduce the complex vocalizations of zebra finches (Taeniopygia guttata) down to the pitch, volume and timbre of the original songs. The results, published June 16, 2021 in the journal Current Biology, provide proof of concept that complex, high-dimensional natural behaviors can be synthesized directly from ongoing neural activity. By exploiting knowledge of the peripheral vocal system and the temporal structure of its output, the approach may inspire similar prosthetics for humans and other species.

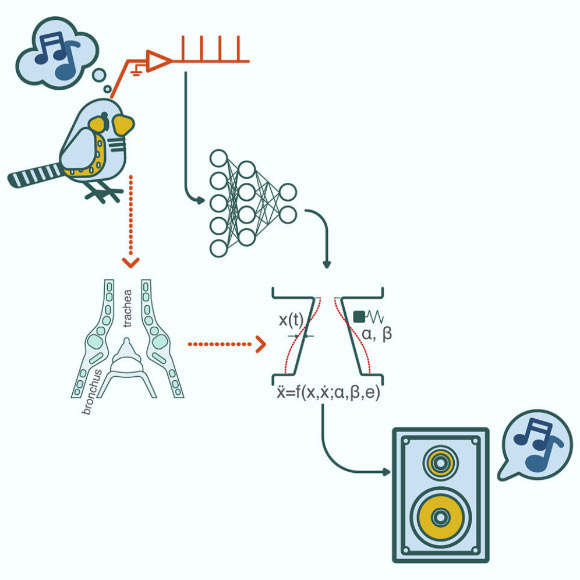

Arneodo et al. demonstrate the possibilities of a future speech prosthesis for humans. Image credit: Arneodo et al., doi: 10.1016/j.cub.2021.05.035.

“The current state of the art in communication prosthetics is implantable devices that allow you to generate textual output, writing up to 20 words per minute,” said University of California, San Diego’s Professor Timothy Gentner.

“Now imagine a vocal prosthesis that enables you to communicate naturally with speech, saying out loud what you’re thinking nearly as you’re thinking it. That is our ultimate goal, and it is the next frontier in functional recovery.”

“In many people’s minds, going from a songbird model to a system that will eventually go into humans is a pretty big evolutionary jump,” added University of California, San Diego’s Professor Vikash Gilja.

“But it’s a model that gives us a complex behavior that we don’t have access to in typical primate models that are commonly used for neural prosthesis research.”

The researchers implanted silicon electrodes in adult male zebra finches and monitored the birds’ neural activity while they sang.

Specifically, they recorded the electrical activity of multiple populations of neurons in the sensorimotor part of the brain that ultimately controls the muscles responsible for singing.

The scientists fed the neural recordings into machine learning algorithms.

The idea was that these algorithms would generate computer-synthesized copies of the birds’ actual songs based solely on their neural activity.

But translating patterns of neural activity into patterns of sounds is no easy task.

“There are just too many neural patterns and too many sound patterns to ever find a single solution for how to directly map one signal onto the other,” Professor Gentner said.

To make the problem tractable, the team used simple representations of the birds’ vocalization patterns.

These are essentially mathematical equations that model the physical changes in pressure and tension that occur in the finches’ vocal organ, the syrinx, during singing.
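To make the idea of a low-dimensional representation concrete, here is a toy Python sketch. It is not the authors’ syrinx model: two control signals stand in for pressure and tension, with pressure gating loudness above an assumed phonation threshold and tension setting pitch. The function `synthesize` and its parameters `f_min`, `f_max` and `threshold` are hypothetical, and the constants are illustrative rather than measured zebra finch values.

```python
import math
from typing import List, Sequence


def synthesize(pressure: Sequence[float], tension: Sequence[float],
               sample_rate: int = 44100,
               f_min: float = 400.0, f_max: float = 4000.0,
               threshold: float = 0.1) -> List[float]:
    """Toy two-parameter sound source (hypothetical, not the published model).

    pressure and tension are per-sample control values in [0, 1].
    Pressure sets loudness; tension sets pitch. All constants are assumptions.
    """
    if len(pressure) != len(tension):
        raise ValueError("pressure and tension must have the same length")
    out: List[float] = []
    phase = 0.0
    for p, k in zip(pressure, tension):
        # Assumed mapping: fundamental frequency rises linearly with tension.
        f0 = f_min + k * (f_max - f_min)
        # Accumulate (and wrap) phase so pitch can vary smoothly per sample.
        phase = (phase + 2.0 * math.pi * f0 / sample_rate) % (2.0 * math.pi)
        # Silence until pressure exceeds the assumed phonation threshold.
        amp = max(0.0, p - threshold) / (1.0 - threshold)
        # A weak second harmonic gives the tone a little timbre.
        out.append(amp * (0.8 * math.sin(phase) + 0.2 * math.sin(2.0 * phase)))
    return out
```

For example, 0.1 s of constant pressure 1.0 and tension 0.5 at 44.1 kHz yields a tone with a 2,200 Hz fundamental under these assumed constants, while any pressure below the threshold yields silence. The point is only that two slowly varying numbers can drive a rich audio signal.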

The authors then trained their algorithms to map neural activity directly to these representations.

This is more efficient than mapping neural activity directly onto the songs themselves.

“If you need to model every little nuance, every little detail of the underlying sound, then the mapping problem becomes a lot more challenging,” Professor Gilja said.

“By having this simple representation of the songbirds’ complex vocal behavior, our system can learn mappings that are more robust and more generalizable to a wider range of conditions and behaviors.”
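The mapping step can be illustrated with an equally hedged sketch. Here each time bin of neural activity is a vector of per-channel firing rates (plus a constant 1.0 for the bias), and a linear ridge regression maps it to the two control parameters, pressure and tension. Ridge regression is a stand-in chosen for brevity, not necessarily the algorithm the authors used; `fit_ridge`, `predict` and the feature layout are hypothetical. Because the target has two dimensions rather than thousands of audio samples per second, the decoder needs only a few weights per channel.

```python
from typing import List

Matrix = List[List[float]]


def _solve(a: Matrix, b: Matrix) -> Matrix:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    # Augment a copy of a with the columns of b.
    m = [row[:] + rhs[:] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    # Left block is now diagonal; divide each row by its pivot.
    return [[x / m[i][i] for x in m[i][n:]] for i in range(n)]


def fit_ridge(x: Matrix, y: Matrix, lam: float = 1e-3) -> Matrix:
    """Return W minimising ||XW - Y||^2 + lam * ||W||^2 (bias included).

    Each row of x is one time bin of neural features ending in 1.0;
    each row of y is the target (pressure, tension) for that bin.
    """
    d = len(x[0])
    xtx = [[sum(r[i] * r[j] for r in x) + (lam if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [[sum(r[i] * t[k] for r, t in zip(x, y)) for k in range(len(y[0]))]
           for i in range(d)]
    return _solve(xtx, xty)


def predict(w: Matrix, features: List[float]) -> List[float]:
    """Decode one time bin of features into (pressure, tension)."""
    return [sum(f * w[i][k] for i, f in enumerate(features))
            for k in range(len(w[0]))]
```

In a full pipeline the decoded parameter trajectories would then drive a synthesizer; this sketch stops at the decoded parameters and says nothing about how well a linear map fits real recordings.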

The team’s next step is to demonstrate that their system can reconstruct birdsong from neural activity in real time.

_____

Ezequiel M. Arneodo et al. Neurally driven synthesis of learned, complex vocalizations. Current Biology, published online June 16, 2021; doi: 10.1016/j.cub.2021.05.035